RidgeSketch: A Fast Sketching Based Solver for Large Scale Ridge Regression (SIMAX 2022)

Abstract

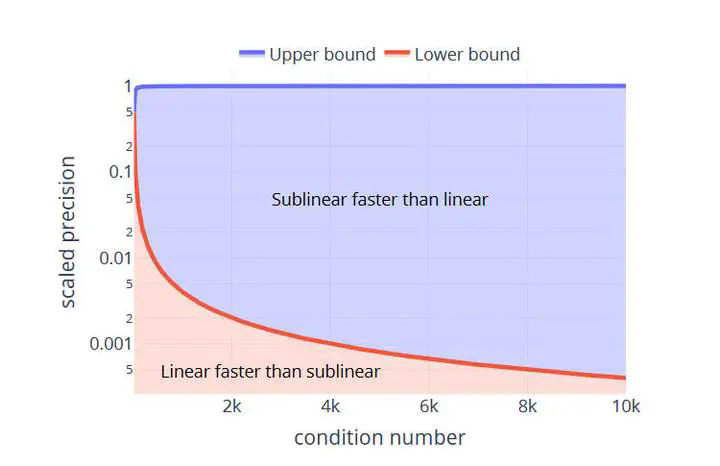

We propose new variants of the sketch-and-project method for solving large-scale ridge regression problems. First, we propose a new momentum alternative and provide a theorem showing that it can speed up the convergence of sketch-and-project through a fast sublinear convergence rate. We carefully delimit the settings in which this new sublinear rate is faster than the previously known linear rate of convergence of sketch-and-project without momentum. Second, we combine the sketch-and-project method with modern sketching methods such as the count sketch, the subcount sketch (a new method we propose), and subsampled Hadamard transforms. We show experimentally that, when combined with the sketch-and-project method, the (sub)count sketch is very effective on sparse data and the standard subsample sketch is effective on dense data. Indeed, we show that these sketching methods, combined with our new momentum scheme, result in methods that are competitive even with the Conjugate Gradient method on real large-scale data. In contrast, we show that the subsampled Hadamard transform does not perform well in this setting, despite the use of fast Hadamard transforms, nor do recently proposed acceleration schemes work well in practice. To support our experimental findings, and to invite the community to validate and extend our results, we are also releasing with this paper an open source software package, RidgeSketch. We designed this object-oriented Python package for testing sketch-and-project methods and benchmarking ridge regression solvers. RidgeSketch is highly modular, and new sketching methods can easily be added as subclasses. We provide code snippets of our package in the appendix.
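To make the basic iteration concrete, the following is a minimal sketch of sketch-and-project with a subsample sketch, written under several assumptions that are not taken from the paper or from the RidgeSketch package: the ridge problem is posed as the linear system (AᵀA + λI) w = Aᵀb, the projection is taken in the norm induced by that system matrix, and the sketch is a uniformly random block of coordinates. The function name `sketch_and_project_ridge` and its parameters are hypothetical, and the momentum scheme proposed in the paper is not included.

```python
import numpy as np


def sketch_and_project_ridge(A, b, lam, sketch_size=10, n_iters=500, seed=0):
    """Illustrative sketch-and-project solver for ridge regression.

    Hypothetical helper, not the RidgeSketch API. Solves
    (A^T A + lam * I) w = A^T b using a subsample sketch, i.e. each
    iteration picks a random block of coordinates and solves the
    corresponding small sub-system exactly.
    """
    rng = np.random.default_rng(seed)
    d = A.shape[1]

    # System matrix and right-hand side of the ridge normal equations.
    # Formed explicitly here for clarity; assumed small enough to store.
    M = A.T @ A + lam * np.eye(d)
    c = A.T @ b

    w = np.zeros(d)
    for _ in range(n_iters):
        # Subsample sketch: S consists of sketch_size columns of the identity.
        idx = rng.choice(d, size=sketch_size, replace=False)

        # Sketched residual S^T (M w - c).
        sketched_residual = M[idx] @ w - c[idx]

        # Solve the small sketched system (S^T M S) delta = S^T (M w - c).
        # lstsq returns the minimum-norm solution, matching a pseudoinverse.
        delta = np.linalg.lstsq(
            M[np.ix_(idx, idx)], sketched_residual, rcond=None
        )[0]

        # Update only the sampled coordinates: w <- w - S delta.
        w[idx] -= delta
    return w
```

With this choice of sketch, each step touches only a `sketch_size` by `sketch_size` block of the system matrix, which is why the cost per iteration stays small; other sketches (count sketch, subsampled Hadamard transform) would replace the row selection by a different sketching matrix.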